As a 30-year-old woman, I can do a lot of things. I can vote. I can drink. I can drop hundreds of dollars on therapy to help me work through my deeply self-destructive attachment style and still let men treat me like shit. But there's one thing I recently learned that I can't do -- put a naked woman on the internet.

Let me rewind a bit.

It was the last week of April, and I was prepping a print article, "Making Work in Her Own Image," for publication on our site. It was a profile of Rio Sofia, a trans woman whose multidisciplinary nude self-portraiture explores the coercive side of gender. Far from the empowerment narratives we're used to seeing in this post-"Transgender Tipping Point" age of visibility, the figures in her photographs -- all played by Sofia herself -- are at odds with one another, mixing pleasure with despair across a number of bondage scenarios.

I found the images from Sofia's Forced Womanhood! series, named after the forced-fem erotica magazine, refreshing as well as challenging. I like seeing images of bodies like mine, especially ones that were created by another trans woman. Usually when I see an image of a trans woman's body, it's been filtered through the gaze of whichever cisgender editor, photographer, director, or producer has decided to bring that image to life -- my Instagram feed notwithstanding -- and that's if I see an image of a trans woman's body at all. Entertainment media and commercial propaganda are kind to no woman, but there's a specific kind of cruelty they save for those of us who are darker skinned, disabled, fat, or trans -- a cruelty that's compounded even further for anyone who's more than one of those things. It's the cruelty of absence, of telling us that we don't exist despite however many pounds of evidence we have to the contrary.

Flipping through the pages of our May issue, I felt proud of the work I'd done -- of the profile I'd written and the imagery I'd brought to the pages of one of the longest-running LGBTQ+ publications still in existence. I was proud to be a trans journalist profiling a trans subject and to have that profile edited by a trans editor, Out Executive Editor Raquel Willis -- an all-trans editorial process I'd only experienced a couple of times before, with Muna Mire at Mask Magazine and Meredith Talusan at them. I was happy to have played a role in Out's print magazine publishing the images in my article. Knowing how tightly controlled our representation truly is, I felt charged up at the thought that this image of a trans woman -- gagged and bound to a chair, her genitals visibly locked in a chastity device -- would soon find its way into Barnes & Noble stores nationwide. Who would end up seeing it? What might it awaken in them? It was all so exhilarating to think about.

Cut to the morning of April 23, when I was building the online version of the profile. Once I'd completed the text in the backend of our content management system, I popped over to the Slack channel where we make requests to Pride Media's art department. I dropped the same imagery from the print article into the channel -- a self-portrait of Rio where she's bound and gagged, wearing a chastity device and a pair of prosthetic breasts, along with a collage of forced-fem imagery that the artist had made -- and requested that someone in the art department resize them so that I could embed them in the body of the piece.

There was just one problem: I couldn't use the images.

As I learned from one of our art staffers, who declined to be interviewed on the record, it was all because of Google. Pride Media -- the company that owns Out, The Advocate, Pride.com, and Plus -- has a business relationship with Google Ads, which basically works as follows: Random advertisers buy advertising spots from Google Ads, and Google Ads pays websites -- like Out -- to run those spots on their various pages using a program called Google AdSense. The program has a strict policy on prohibited content and will pull ads from any page that contains "adult themes, including sex, violence, vulgarity, or other distasteful depictions of children or popular children's characters, that are unsuitable for a general audience." What's a "general audience"? That's for Google to decide. And since Google has decided that it's inappropriate for a general audience to see a trans woman's naked body -- or anyone else's, for that matter -- Out is unable to publish an image of a trans woman's naked body, lest Google demonetize that article in retaliation. How much money would we lose from a single demonetized article? That I can't say. I was unable to confirm the amount with anyone on the record by the time of publication.

I texted Sofia the bad news. She was disappointed but not discouraged, so we tried to come up with a solution she'd be into that still followed Google AdSense policy. I suggested using a different Forced Womanhood! portrait, but all of the ones she felt accurately represented the series contained nudity, which, again, is a big no-no for Google AdSense. So, we discussed censoring the images. Sofia suggested big red bars yelling "CENSORED" in white all-caps letters -- basically, if Out was going to censor her artwork, she wanted to aestheticize the censorship as much as possible. Then, we tried a subtler, pixelated blurring. Sofia's mood began to dip as she saw her artwork altered before her eyes. Eventually, at her request, we decided to use an image from the portrait series where she's fully clothed -- one that neither of us would've chosen to represent her work.

"I was really excited [to have my artwork featured in Out]," Sofia told me in a later interview. "It was a huge opportunity. Since making the work, I didn't have very many chances to get the work out there. I want to share my ideas with other queer and trans people, and Out has such a large platform with such a huge amount of visibility."

Realizing I wouldn't be able to publish the profile as it ought to be was demoralizing on a creative and professional level. It also felt personal. I'd just spent about seven hours trying to argue that a body like mine should be seen, and I lost. (I couldn't even publish an image of the Sleeping Hermaphroditus sculpture pictured above where the statue's breasts and genitals are exposed.) I was frustrated, to say the least.

That frustration multiplied after the article was published two days later. Out's Instagram account, @outmagazine, promoted the profile on April 25, only a few hours after we'd published it on our site. Our social editor had used a cropped version of the Forced Womanhood! portrait we'd been able to publish in print but not online, cutting out everything below Sofia's waist but leaving the breast forms and jawbreaker gag bits intact. The post racked up over 2,000 likes and then disappeared -- deleted, our social editor said, for violating Instagram's nudity policy.

"This image was correctly deleted," an Instagram spokesperson later told me, adding that the image wasn't removed due to nudity but because it featured a sex toy. "Under our policies we do not allow content that depicts the use of sex toys, irrespective of whether the image is art."

Instagram -- or rather Facebook, its parent company -- has a restrictive adult content policy that prohibits images of sexual activity, a "visible anus," the use of sex toys, and "real nude adults." "Uncovered female nipples" are also prohibited, unless it's a picture of a woman breastfeeding, giving birth, or doing something health-related, like raising awareness about breast cancer or gender-affirming surgeries. It's a policy I first came into contact with about 11 months into my transition. That was the first time I remember Instagram deleting one of my topless photos -- i.e., the first time the algorithm read me as a woman. (Instagram uses "a combination of AI and human review to proactively find and remove content that violates our policies," a spokesperson for the company confirmed.) But it's a policy that's far from new for a lot of Instagram users.

Sex workers who use Instagram to promote their work -- and even those who just use it to post cute pics or whatever -- are quite familiar with the platform's adult content policy. Instagram has often used that policy as justification for not just deleting sex workers' photos, but shadowbanning and deleting their entire accounts. "Over the course of the last several months, almost 200 adult performers have had their Instagram accounts terminated without explanation," wrote James Felton, legal counsel for the Adult Performers Actors Guild, in an open letter to Facebook this past April. "We have quickly found that most sex worker accounts, porn star accounts, and adult model accounts are deleted simply for existing!" APAG president Alana Evans said in a statement released that same month. "Many have been deleted for bikini photos, workout videos, and less, while celebrities like Kim Kardashian, Miley Cyrus, and countless other Instagram Verified celebrities, post images on a regular basis with full nudity, exposed nipples, bare backside, and more." The protest went offline in June, when dozens picketed outside of Instagram's Silicon Valley headquarters as part of a demonstration organized by the APAG.

Instagram's policy is very similar to the one Google AdSense uses, albeit a bit more detailed, but something about it stood out to me. There's a line toward the beginning of the policy that explains its rationale: "...we default to removing sexual imagery to prevent the sharing of non-consensual or underage content." Did you catch that? There's a clever sleight of hand happening there that's easy to miss if you're not paying attention. By mentioning "underage content" in the same sentence as "sexual imagery," Facebook is able to tie the two together, as if the very existence of sexual imagery somehow encourages or promotes the sharing of underage content online. It's not unlike how Tumblr, after discovering child pornography on its servers, instituted policy changes that effectively drove adult content and sex workers off its platform. It's also not unlike the efforts of prosecutors, lawmakers, and anti-trafficking advocates to conflate the threat of sexual trafficking -- particularly the sexual trafficking of minors -- with sex work itself. Their efforts have led to the shutdown of affordable, accessible escorting sites like Rentboy and Backpage, as well as Craigslist's Personals section. Similar efforts by lawmakers have led to the passage of the Allow States and Victims to Fight Online Sex Trafficking Act and the Stop Enabling Sex Traffickers Act, a bill package also known as FOSTA-SESTA that passed thanks to yes votes from 13 of our current Democratic presidential candidates, including Elizabeth Warren, Beto O'Rourke, Bernie Sanders, and Kamala Harris. (Harris has a particularly hostile record on sex work, having led the legal fight against Backpage during her tenure as California's Attorney General.) Sex workers have reportedly been leaving Twitter en masse since FOSTA-SESTA's passage, though it should be noted that platforms like Twitter and Instagram have been hostile to such users for years.

Content policies like those instituted by Facebook and Google target sex workers, hitting the most structurally marginalized the hardest, but their impact reaches further than many of us realize -- certainly further than I ever realized before I tried to post a photo of a naked trans woman on our site. Because Out has a mutually beneficial business relationship with Google, what we publish must follow Google's rules. (This is a problem of our own making, of course. We could always stop monetizing our articles through Google AdSense. My attempts to discuss this on the record with someone at Pride Media were unsuccessful.) Because we use Instagram to promote our content, what we promote must follow the rules of its parent company, Facebook. Neither of these tech giants allows the kind of nudity featured in Rio Sofia's portraits, so Out will shy away from publishing work like hers: bold, sexual, unconcerned with respectability or playing by capital-C Community rules.

As I wrote in my profile of Sofia, transfeminine people rarely control the making of our own imagery. From movies and TV to the Western art canon, our representation has, historically speaking, been a matter of cis cultural production. That's begun to change in recent years thanks to camera phones and social media, which offer trans women a truly unprecedented ability to create our own images and share those images with the world. But that distribution becomes impossible when our tool for doing so -- i.e., Instagram or Tumblr -- forbids it. By deciding which images we can post, tech giants like Facebook and Google effectively decide which stories we can tell and how we tell them, something that could have disastrous consequences for journalism, queer or otherwise, if left unchecked. As journalists, our work has the potential to speak truth to power, exposing its secrets to an unsuspecting public. But we can just as easily do nothing more than amplify the voices of the powerful while claiming that our biased perspective is objective or neutral. "Neutrality isn't real," wrote journalist Lewis Wallace in a much-circulated Medium essay that led to his firing from American Public Media's Marketplace. "Neutrality is impossible for me, and you should admit that it is for you, too. As a member of a marginalized community (I am transgender), I've never had the opportunity to pretend I can be 'neutral.'" It's a dynamic wherein a dominant group is deciding the norms for us all, even when those norms require our silence to function. I recognize this same dynamic when I look back at what happened with the images in my profile.

I raised my concerns about censoring LGBTQ+ reporting and storytelling with a Google spokesperson, who tried to assuage them by telling me that they "enforce our advertising policies consistently across all publishers in our ad network" and that they "review content from our LGBTQ+ publishers against the same standards as all other publishers." The Instagram spokesperson I talked to wasn't able to provide much comfort either. "We want Instagram to be a place where people can express themselves, but we also have a responsibility to keep people safe," the spokesperson said. "We try to write policies that adequately balance freedom of expression and safety, but doing this for a community of a billion people from all corners of the world will always be challenging."

Google, Twitter, Facebook, and Tumblr don't control every corner of the internet. Switter, for example, is an explicitly sex work-friendly social media platform that sidesteps American law by hosting itself internationally. But think about how much of your screen time is filtered through one of these brands and their adult content policies -- or through Amazon or Apple, both of which have restrictive policies of their own that extend to their app stores. (Ever wonder why there's no OnlyFans iPhone app?) Think about how much more of your screen time might be filtered through such policies in the future as our outdated antitrust laws fail to prevent these companies from further consolidating their power to the point of monopoly.

I don't expect anything from a corporation -- whose only motive, after all, is profit -- but I do find the situation depressing. Infantilizing, even. I'm 30 years old, and I want to put a naked woman on the internet (with her consent, of course). Am I not old enough to make that decision for myself?

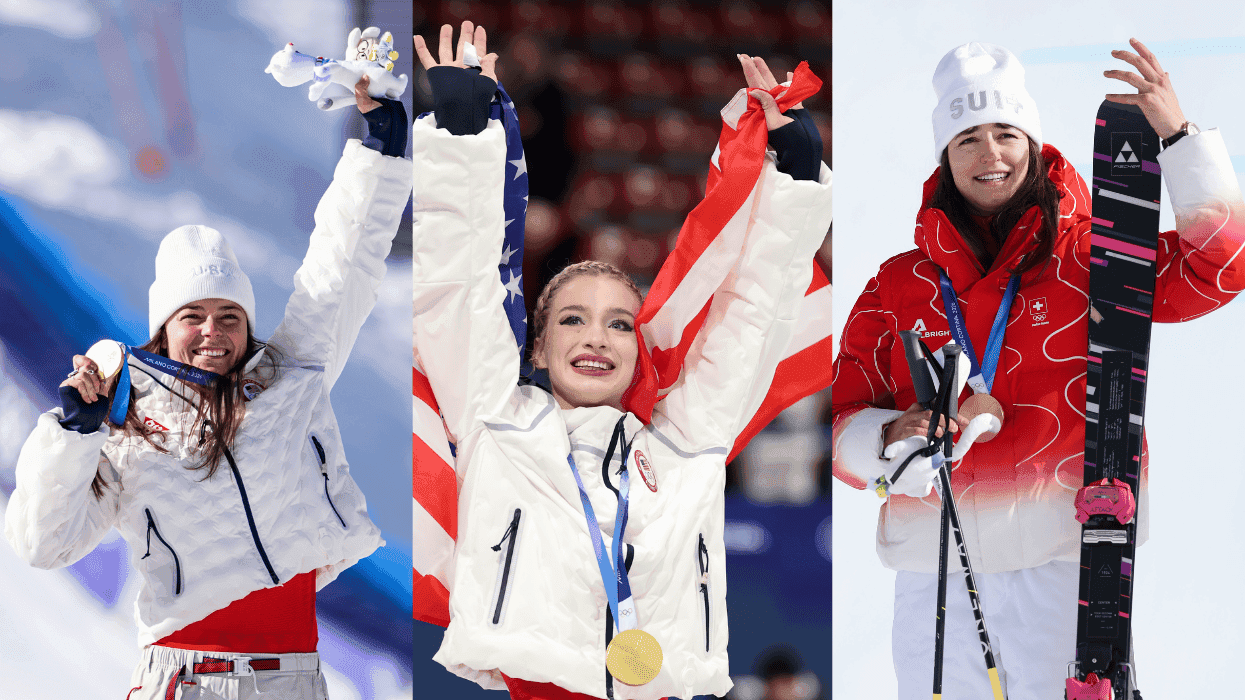

This article appears in Out's August 2019 issue celebrating the body. The cover features South African Olympian Caster Semenya. To read more, grab your own copy of the issue on Kindle, Nook, Zinio or (newly) Apple News+ today.